Building Resilience to Misinformation: An Instructional Toolkit

Are misinformation interventions different or fundamentally the same?

by Heather Ganshorn on January 21st, 2025

On the London School of Economics blog, National University of Singapore professor Ozan Kuru argues for a more coordinated approach to misinformation interventions and for less emphasis on the small differences between them.

The Stanford Internet Observatory is being dismantled

by Paul Pival on June 18th, 2024

As reported by The Platformer and The Washington Post, it seems Stanford is unwilling to continue funding (lawsuits against) this excellent research group.

The Disinformation Machine: How Susceptible Are We to AI Propaganda?

by Paul Pival on May 23rd, 2024

From Stanford's Institute for Human-Centered Artificial Intelligence (HAI): The Disinformation Machine: How Susceptible Are We to AI Propaganda? According to the linked articles, we're pretty susceptible :-(

“Deepfakes are probably more persuasive than text, more likely to go viral, and probably possess greater plausibility than a single written paragraph,” he said. “I’m extremely worried about what’s coming up with video and audio.”

Disruptions on the Horizon (Policy Horizons Canada)

by Paul Pival on May 23rd, 2024

A recently released report from Policy Horizons Canada, Disruptions on the Horizon, "identifies and assesses 35 disruptions for which Canada may need to prepare and explores some of the interconnections between them." Number 1 on the list is:

People cannot tell what is true and what is not

The information ecosystem is flooded with human- and Artificial Intelligence (AI)-generated content. Mis- and disinformation make it almost impossible to know what is fake or real. It is much harder to know what or who to trust.

More powerful generative AI tools, declining trust in traditional knowledge sources, and algorithms designed for emotional engagement rather than factual reporting could increase distrust and social fragmentation. More people may live in separate realities shaped by their personalized media and information ecosystems. These realities could become hotbeds of disinformation, be characterized by incompatible and competing narratives, and form the basis of fault lines in society. Research and the creation of scientific evidence could become increasingly difficult. Public decision making could be compromised as institutions struggle to effectively communicate key messaging on education, public health, research, and government information.

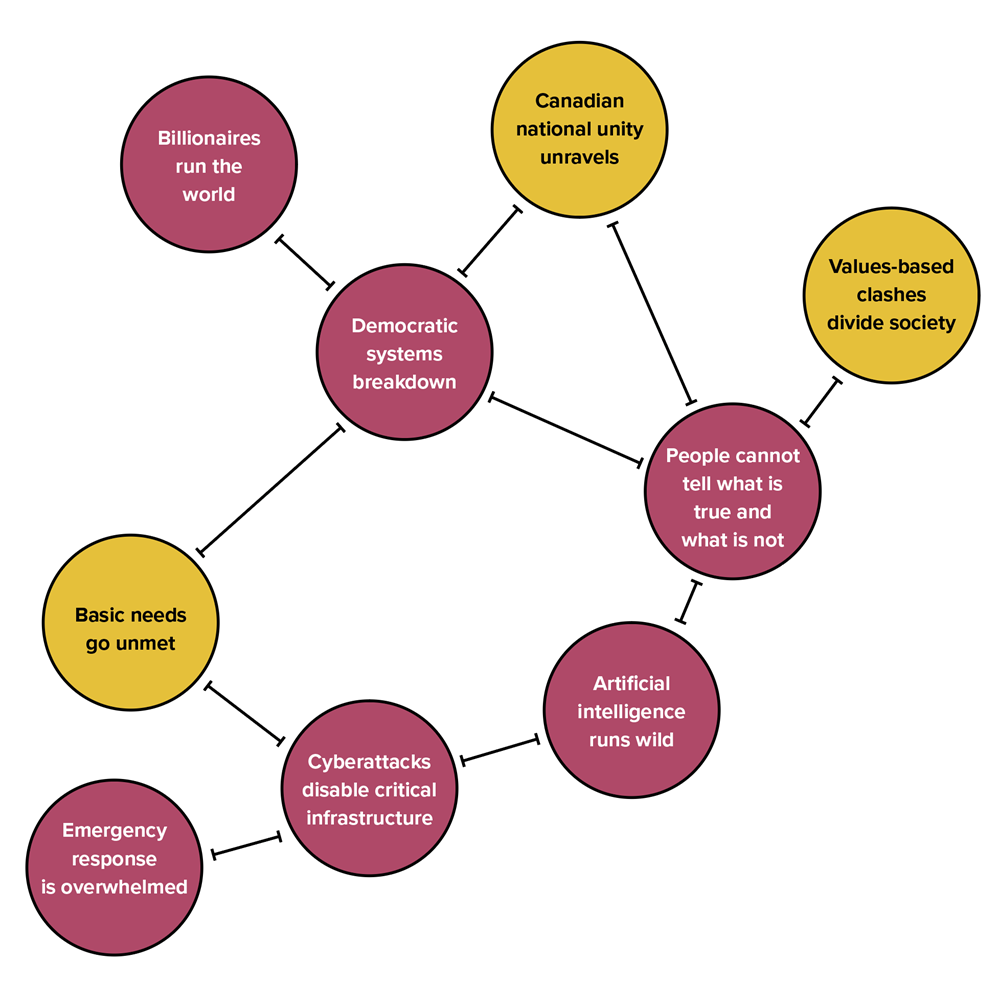

They also include a diagram showing how interconnected this particular risk is to many others:


- Last Updated: Jan 29, 2025 1:18 PM

- URL: https://libguides.ucalgary.ca/c.php?g=731350&p=5339416
